I was working on a progressive web app recently and wanted it to feel as fast as possible. That meant caching data so I could show it to users without waiting for a server response. I also expect users won’t always have a good Wi-Fi connection when using this app, which makes caching even more important.

I’m not going to go into detail on setting up a PWA, but I will give enough background for developers unfamiliar with the topic to follow along. The examples assume a JavaScript frontend and a C# backend. They’re minimal, so the languages aren’t that important. Throughout the post, the example application fetches a list of movies from the server.

Service Workers

Service workers are what make progressive web apps work. They act as a proxy between your application and your server. Your application continues to make requests like normal, and the service worker gets a chance to intercept those requests before the browser sends them to the server.

This means you can also use them to cache responses you get from the server. Application code doesn’t have to know anything about the cache either! That is a nice separation of concerns if you ask me. Only a small part of the application is concerned with handling offline functionality.

Caching

There are different ways we can go about caching. For JavaScript and CSS, it is generally best to include a hash or version number in the filename itself. That allows us to set a very long cache time on those files, because if we ever change the code, the filename changes too.
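As a small illustration of the filename approach, here’s a minimal Node.js sketch that derives a cache-busting name from a file’s contents. Bundlers such as webpack can do this for you; `hashedFilename` is a hypothetical helper, and the 8-character SHA-256 prefix is an arbitrary choice.

```javascript
const crypto = require("crypto");
const path = require("path");

// Hypothetical helper: turn "app.js" plus its contents into "app.<hash>.js".
// Identical contents always give the same name; any edit gives a new name (and URL),
// so the file can be cached for a very long time without serving stale code.
function hashedFilename(filename, contents, hashLength = 8) {
  const hash = crypto
    .createHash("sha256")
    .update(contents)
    .digest("hex")
    .slice(0, hashLength);
  const ext = path.extname(filename);        // ".js"
  const base = path.basename(filename, ext); // "app"
  return `${base}.${hash}${ext}`;
}

const v1 = hashedFilename("app.js", "console.log('v1');");
const v2 = hashedFilename("app.js", "console.log('v2');");
console.log(v1, v2); // two different names of the form app.<8 hex chars>.js
```

Because the URL itself changes whenever the code changes, a stale copy can never be served for the new version.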

That doesn’t work for data, though (for example, responses to GET requests on a REST endpoint). The URL we use won’t change whenever the data changes. Instead, we can control caching for these requests with HTTP response headers.

The Cache-Control header specifies who is allowed to cache the data and for how long. This lets you set up separate rules for browsers and for CDN (or proxy) servers.

We can also use the ETag (entity tag) header for cache validation and efficient updates. An ETag is an identifier for a specific version of the data we get from our API, so when the data changes, the ETag changes too.
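One common way to produce an ETag is to hash the serialized response body. Here’s a minimal Node.js sketch of that idea; `makeETag` is a hypothetical helper and the movie data is made up, and your server framework may generate ETags differently (or not at all).

```javascript
const crypto = require("crypto");

// Hypothetical helper: derive a strong ETag from the serialized response body.
// The same body always produces the same tag; changed data produces a new one.
// ETag values are sent as quoted strings, e.g. ETag: "<hash>"
function makeETag(body) {
  const hash = crypto.createHash("sha1").update(body).digest("hex");
  return `"${hash}"`;
}

const movies = [{ id: 1, title: "Alien" }]; // hypothetical sample data
console.log(makeETag(JSON.stringify(movies)));
```

SHA-1 is fine here because the tag only needs to change when the data changes; it isn’t a security boundary.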

If your application makes cross-origin (CORS) requests to the API, you’ll have to configure the server to expose the ETag header; otherwise the browser won’t let JavaScript, including the service worker, read it. If you forget this step, Workbox won’t be able to use the ETag to tell when the data has changed, so it may not send update notifications. How you configure this depends on your server platform. In ASP.NET Core you could do something like this:

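// In Startup.ConfigureServices
services.AddCors(options =>
{
    options.AddPolicy("ExposeResponseHeaders", builder =>
    {
        // Let browser JavaScript (including the service worker) read the ETag header.
        // Combine this with your existing CORS rules, e.g. WithOrigins(...).
        builder.WithExposedHeaders("etag");
    });
});

// In Startup.Configure
app.UseCors("ExposeResponseHeaders");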

The browser (or CDN) can use this header for efficient updates. The first request receives an ETag header in the response. Future requests send that value back in an If-None-Match header, so the server can tell whether the data has changed. If it hasn’t, the server responds with 304 (Not Modified) and no body. Skipping the body saves bandwidth, which makes this strategy particularly friendly to mobile devices.
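The server-side decision can be sketched as a small function. This is an illustrative Node.js sketch rather than any framework’s API: `conditionalGet` is a hypothetical helper, it applies the weak comparison HTTP specifies for If-None-Match, and it assumes no ETag contains a comma.

```javascript
// Hypothetical helper: decide how to answer a GET for the movie list.
// ifNoneMatch: the request's If-None-Match header (undefined if absent)
// currentETag: the ETag of the data the server has right now
function conditionalGet(ifNoneMatch, currentETag, body) {
  // If-None-Match uses weak comparison, so a W/ prefix is ignored.
  const normalize = (tag) => tag.trim().replace(/^W\//, "");

  if (ifNoneMatch) {
    // Simplification: assumes no tag contains a comma.
    const tags = ifNoneMatch.split(",").map(normalize);
    if (tags.includes("*") || tags.includes(normalize(currentETag))) {
      // The client's copy is current: send headers only, no body.
      return { status: 304, headers: { ETag: currentETag } };
    }
  }
  // First request, or the data changed: send the full response with its ETag.
  return { status: 200, headers: { ETag: currentETag }, body };
}

console.log(conditionalGet(undefined, '"v2"', "[...]").status); // 200
console.log(conditionalGet('"v2"', '"v2"', "[...]").status);    // 304
console.log(conditionalGet('"v1"', '"v2"', "[...]").status);    // 200
```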

Workbox

To make caching easier in the service worker, I used Google’s Workbox library. Workbox hides some of the complexity of working with service workers. Among other things, it provides methods for easily caching the data our application needs.

Workbox provides several different caching strategies. The strategy we’ll use is the StaleWhileRevalidate strategy. This strategy allows us to get a response from the cache immediately. It will also check with the server in the background to see if the data has changed.

To set up Workbox with this strategy, we need to tell it which URL we want to cache and give it a cache name. The cache name isn’t strictly required, but it makes it easier to know what data we are working with.

// Serve the movie list from the "movies" cache, refreshing it in the background
workbox.routing.registerRoute(
  new RegExp("https://mywebsite.com/api/Movies"),
  new workbox.strategies.StaleWhileRevalidate({
    cacheName: 'movies'
  })
);

This configuration sets up a cache for the Movies REST endpoint. Whenever our application makes this request, Workbox immediately returns any cached value. It also sends the request to the server and stores the new response in the cache. If there is nothing in the cache yet, the application waits for the server to respond.
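To make that flow concrete, here’s a simplified in-memory imitation of stale-while-revalidate. It is not Workbox’s implementation: a `Map` stands in for the Cache Storage API, and `fetchFromNetwork` is a hypothetical function standing in for the server.

```javascript
// Simplified imitation of the stale-while-revalidate strategy (not Workbox's code).
// fetchFromNetwork(url) is a hypothetical async function that returns fresh data.
function createStaleWhileRevalidate(fetchFromNetwork) {
  const cache = new Map(); // stands in for the Cache Storage API

  return async function get(url) {
    // Always start a network request; when it finishes, store the fresh value.
    const refresh = fetchFromNetwork(url).then((value) => {
      cache.set(url, value);
      return value;
    });

    if (cache.has(url)) {
      // Don't crash on an unhandled rejection if the background refresh fails (e.g. offline).
      refresh.catch(() => {});
      return cache.get(url); // answer immediately with the (possibly stale) cached value
    }
    return refresh; // nothing cached yet: wait for the network
  };
}
```

With a fake server that returns `movies v1` and then `movies v2`, the first call waits for `v1`, the second call returns the cached `v1` right away while fetching `v2` in the background, and the call after that sees `v2`.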

The benefit of this strategy is that users don’t have to wait as long to see the data they’re interested in. The downside is that they could be seeing out-of-date data. That trade-off doesn’t suit all data, but it works well for a list of movies that changes infrequently.

We don’t have to do anything special in our application code to use this cache! If you’re using Axios, you just make the request as usual.

axios.get("https://mywebsite.com/api/Movies")
  .then(response => {
    displayMovies(response.data);
  });

The first time the request is made, the promise resolves after the server responds. The next time, the promise resolves almost immediately with the cached response, while the service worker also sends the request to the server and updates the cache with the new response.

We now have an application that feels faster to our users, since they don’t have to wait for the server every time. However, this creates a new problem: the cache can hold updated data that the page isn’t showing. We need a way to let our application know when the cache is updated.

Broadcast Updates

Fortunately, Workbox already provides this functionality. We just need to go back to our service worker code and add the broadcast update plugin.

workbox.routing.registerRoute(
  new RegExp("https://mywebsite.com/api/Movies"),
  new workbox.strategies.StaleWhileRevalidate({
    cacheName: 'movies',
    plugins: [
      // Post a message on the "cache-update" channel whenever the cached response changes
      new workbox.broadcastUpdate.Plugin({
        channelName: 'cache-update'
      })
    ]
  })
);

When the cached response changes, the plugin posts a message on a BroadcastChannel named “cache-update”, which our application can listen to. In our application code, we just need to add an event listener for messages on that channel.
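Before it posts anything, the plugin has to decide whether the new response actually differs from the cached one. By default, Workbox compares the Content-Length, ETag and Last-Modified headers rather than the response bodies (the list is configurable through its `headersToCheck` option). The sketch below mirrors that idea in simplified form; it is an illustration, not Workbox’s source, and it needs Node 18+ (or a browser) for the global `Headers` class. It also shows why exposing the ETag matters: a header the browser can’t read simply drops out of the comparison.

```javascript
// Simplified illustration (not Workbox's source) of a header-based update check.
const DEFAULT_HEADERS_TO_CHECK = ["content-length", "etag", "last-modified"];

function responsesAreSame(cachedHeaders, freshHeaders, headersToCheck = DEFAULT_HEADERS_TO_CHECK) {
  // If none of the headers is present on both responses there is nothing to compare,
  // so treat them as the same and send no update message.
  const comparable = headersToCheck.some(
    (name) => cachedHeaders.has(name) && freshHeaders.has(name)
  );
  if (!comparable) return true;

  // Otherwise every checked header must be present (or absent) on both, with equal values.
  return headersToCheck.every(
    (name) =>
      cachedHeaders.has(name) === freshHeaders.has(name) &&
      cachedHeaders.get(name) === freshHeaders.get(name)
  );
}

const cached = new Headers({ ETag: '"v1"' });
console.log(responsesAreSame(cached, new Headers({ ETag: '"v1"' }))); // true: no update
console.log(responsesAreSame(cached, new Headers({ ETag: '"v2"' }))); // false: broadcast an update
console.log(responsesAreSame(cached, new Headers()));                 // true: ETag unreadable, nothing to compare
```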

if ("serviceWorker" in navigator) {
  // Listen on the channel the broadcast update plugin posts to
  const updatesChannel = new BroadcastChannel("cache-update");

  updatesChannel.addEventListener("message", async (event) => {
    const { cacheName, updatedURL } = event.data.payload;

    // Read the updated response straight from the Cache Storage API
    const cache = await caches.open(cacheName);
    const updatedResponse = await cache.match(updatedURL);

    if (updatedResponse && cacheName === "movies") {
      const movies = await updatedResponse.json();
      displayMovies(movies);
    }
  });
}

This code listens for messages on our “cache-update” broadcast channel. When it receives one, it opens the cache named in the message and looks up the updated response by the URL we requested. The application can then update the page with the new data.

Cloudflare

My CDN (content delivery network) of choice is Cloudflare, which has a generous free plan that is great for small sites. Using a CDN benefits both site owners and users.

As a site owner, I like that Cloudflare takes some of the load off my server. Many requests for data that isn’t tied to a specific user can be cached by Cloudflare and never reach my server.

Cloudflare has servers all over the world, and one of them is usually closer to the user than the server running the web application. Users enjoy lower latency and a better experience.

You may be wondering how Cloudflare fits into our PWA caching strategy. It lets us take the strategy one step further by reducing the number of requests that actually hit our server.

We only need to update the cache when an administrator logs into the portal to change the movie list. Until that happens we’re just fine with everyone getting the cached data from Cloudflare.

To do this, we’ll set a long cache time in our Cache-Control header. “Long” is relative, so choose whatever you’re comfortable with; for this example, I’ll use one day.

Tip: When you first start having the browser cache data, begin with a short cache time. If you introduce a bug, a short cache time lets the bad response expire on its own, which is much easier than asking users to clear their browser cache.

In our case, I’m not going to ask the browser to cache data at all. We already have our service worker caching data. We only need to ask Cloudflare to cache data for us.

Cache-Control: public, s-maxage=86400

Here we state that the response is public, so shared caches such as Cloudflare can store it, and that they can cache it for one day (s-maxage is given in seconds, and 86,400 seconds is one day). Browsers ignore the s-maxage directive, but remember that proxies other than Cloudflare could cache the data too.
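To see how that one header is read differently by a shared cache and by a browser, here’s a simplified Node.js sketch. `parseCacheControl` and `freshnessLifetime` are hypothetical helpers, not a library API; they skip edge cases such as quoted values containing commas and ignore the heuristic caching real browsers may apply when no lifetime is given.

```javascript
// Parse a Cache-Control value such as "public, s-maxage=86400" into
// { public: true, "s-maxage": 86400 }. Valueless directives map to true.
// Simplified: does not handle quoted values that contain commas.
function parseCacheControl(header) {
  const directives = {};
  for (const part of header.split(",")) {
    const eq = part.indexOf("=");
    const name = (eq === -1 ? part : part.slice(0, eq)).trim().toLowerCase();
    if (!name) continue; // skip empty entries such as a trailing comma
    if (eq === -1) {
      directives[name] = true;
    } else {
      const value = part.slice(eq + 1).trim().replace(/^"|"$/g, "");
      directives[name] = /^\d+$/.test(value) ? Number(value) : value;
    }
  }
  return directives;
}

// Seconds a cache may serve the response without revalidating it.
// Shared caches (CDNs, proxies) use s-maxage when present; a browser's private
// cache ignores s-maxage and only looks at max-age.
function freshnessLifetime(header, { shared }) {
  const d = parseCacheControl(header);
  if (d["no-store"] || (shared && d["private"])) return 0; // must not be stored here
  if (shared && typeof d["s-maxage"] === "number") return d["s-maxage"];
  if (typeof d["max-age"] === "number") return d["max-age"];
  return 0; // no explicit lifetime
}

const header = "public, s-maxage=86400";
console.log(freshnessLifetime(header, { shared: true }));  // 86400 (Cloudflare)
console.log(freshnessLifetime(header, { shared: false })); // 0 (browser)
```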

Now Cloudflare will cache our data for a day before asking our servers for data again. However, what if we want to have Cloudflare update its cache whenever an admin updates the movie list?

Cloudflare provides an API that we can use to clear out their cache for a specific URL. Now whenever an admin updates the movie list we can make a request to Cloudflare to clear their cache. The next time a user tries to download our movie list Cloudflare will go directly to our server and get the new data.
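Here’s a minimal Node.js (18+) sketch of that purge call against Cloudflare’s v4 “purge files by URL” endpoint. The zone ID and API token are placeholders you’d supply yourself (the token needs cache purge permission), and `buildPurgeRequest` and `purgeMovieCache` are hypothetical helper names; in an ASP.NET backend the same request would go through `HttpClient`.

```javascript
// Hypothetical helper: build the request that purges specific URLs from Cloudflare's cache.
// zoneId and apiToken are placeholders for your own Cloudflare zone ID and API token.
function buildPurgeRequest(zoneId, apiToken, urls) {
  return {
    url: `https://api.cloudflare.com/client/v4/zones/${zoneId}/purge_cache`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ files: urls }),
    },
  };
}

// Hypothetical hook: call this after an admin saves changes to the movie list.
async function purgeMovieCache(zoneId, apiToken) {
  const { url, init } = buildPurgeRequest(zoneId, apiToken, ["https://mywebsite.com/api/Movies"]);
  const response = await fetch(url, init);
  const result = await response.json();
  // Cloudflare API responses report success/failure in the JSON body.
  if (!result.success) {
    throw new Error(`Cloudflare purge failed: ${JSON.stringify(result.errors)}`);
  }
}
```

Keeping the request construction separate from the network call makes it easy to check what will be sent without touching the real API.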

If you really care about speed and are OK with stale data, you can add “stale-while-revalidate” to the Cache-Control header. This works much like what we set up in the service worker earlier. The directive specifies how many seconds after the cached copy expires Cloudflare may keep returning the stale data while it checks with the server for updates.

Cache-Control: public, s-maxage=86400, stale-while-revalidate=2592000

With this configuration, Cloudflare immediately returns cached data for the first day. If a request comes in after that first day but within the stale period (2,592,000 seconds, or 30 days, counted from when the cached copy expired), Cloudflare immediately returns the stale data and then checks with our server to see whether it needs to update its cache.
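Assuming a cache that honors both directives, the timeline for that header can be sketched as below. `cdnCacheState` is a hypothetical helper; 86,400 seconds is 24 × 60 × 60 (one day) and 2,592,000 seconds is 30 × 86,400 (30 days).

```javascript
// Hypothetical helper: classify a cached response on a shared cache (such as a CDN)
// by its age in seconds, for "Cache-Control: public, s-maxage=86400, stale-while-revalidate=2592000".
function cdnCacheState(ageSeconds, sMaxAge = 86400, staleWhileRevalidate = 2592000) {
  if (ageSeconds < sMaxAge) {
    return "fresh"; // serve from cache, no request to our server
  }
  if (ageSeconds < sMaxAge + staleWhileRevalidate) {
    return "stale-revalidate"; // serve the stale copy now, refresh from our server in the background
  }
  return "expired"; // too old: the cache must get a response from our server first
}

console.log(cdnCacheState(60 * 60));    // "fresh" (one hour old)
console.log(cdnCacheState(2 * 86400));  // "stale-revalidate" (two days old)
console.log(cdnCacheState(40 * 86400)); // "expired" (40 days old)
```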

Cloudflare Page Rule

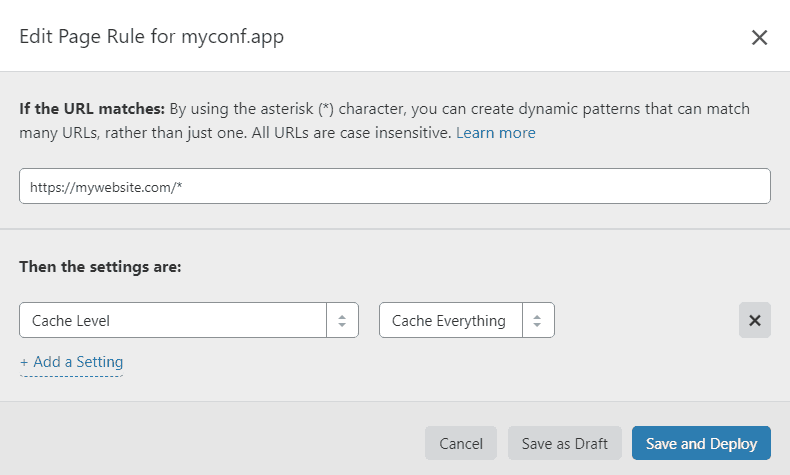

We also need to add a page rule to Cloudflare to make this work. Cloudflare by default only caches files that it expects to be static. Since we’re using this with a REST endpoint it won’t cache our response by default. We can set up a page rule that says they should follow whatever caching rules our server returns. Make sure that you have the correct cache rules for all requests before turning this on. You don’t want to have Cloudflare cache user specific responses.

Cloudflare caching page rule

All we have to do is set up a global page rule that sets the Cache Level to Cache Everything. Cloudflare will then look at our response headers and follow whatever rules they specify (in our case, the Cache-Control header).

That’s it. We now have an application that can show data to users when they’re offline and still show new data quickly when they’re online.